Notes by Howard Gardner

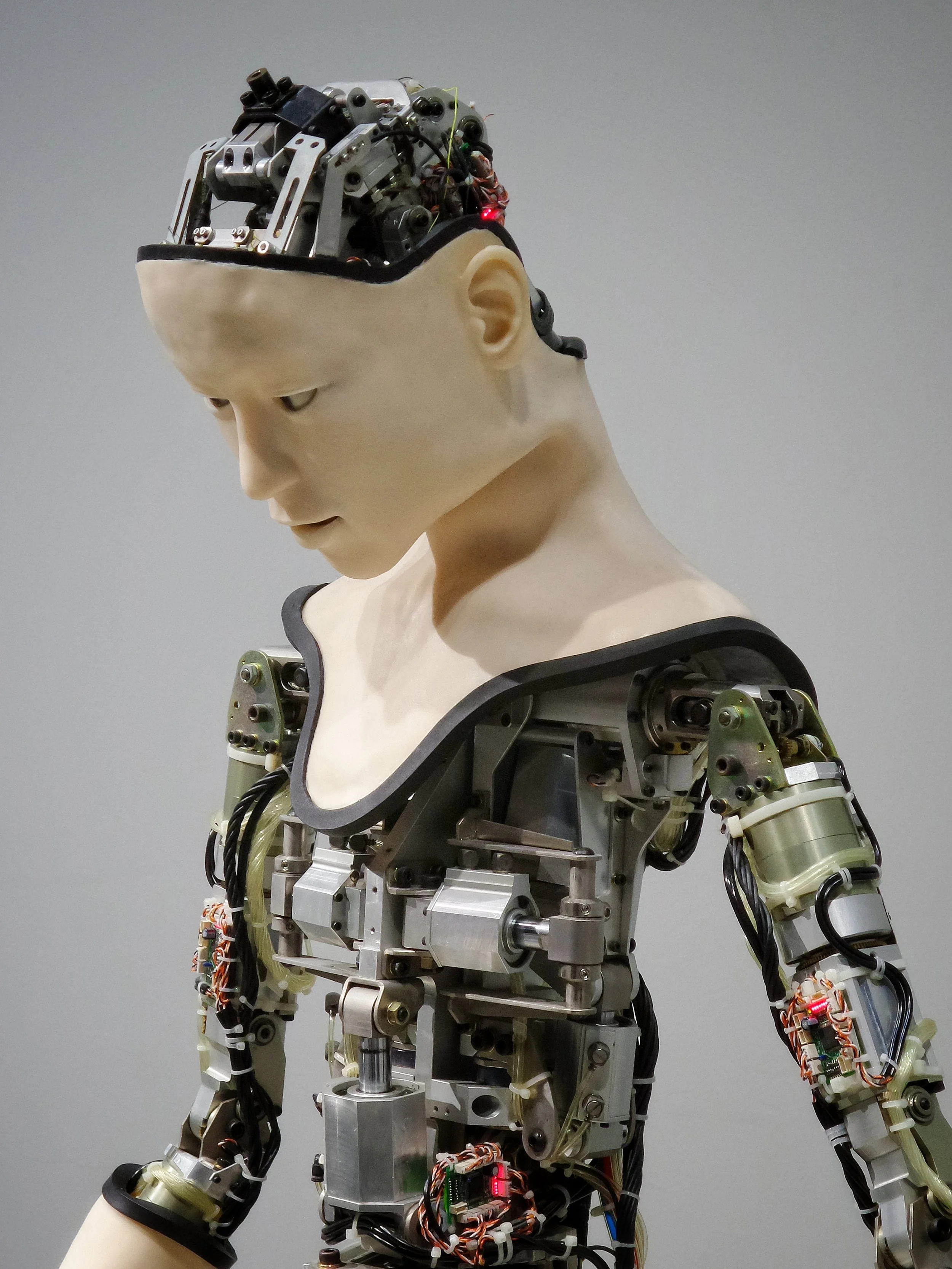

This article describes a new research center that will focus on threats to the very existence of mankind, what it terms ‘existential risk.’ Of course, the existence of our species has always been at risk, from meteors, a new ice age, a meltdown of the oceans, plagues from the natural world (influenza), and plagues from the world of human cultures (wars). What is new are the imminent threats from human inventions, such as artificial intelligence or biotechnology, which could easily get ‘out of control.’

What caught my eye was the term ‘existential,’ since I speculated some years ago that there might be a ninth form of intelligence, what I call ‘the intelligence of big questions.’ Truth to tell, I had in mind questions about our own lives (what meaning does my life have if I know that I will die?) more than questions about the survival of the species, but both can be well described as exercising ‘existential intelligence.’ In the article, philosopher Price says that ‘human intelligence’ has been a constant in human nature and that this phenomenon is going to change in the coming centuries. I am left with the question of whether our current, biologically evolved ‘existential intelligence’ is equal to the task and, if not, how technological or biological innovations will be brought to bear on the future of our species, or of its successors.

To read the article in its entirety, click here.